This article has been a long time coming. Way back before the first New Bedford Kickstarter in 2014, I was starting to wrap up the expansions for New Bedford (now collected in Rising Tides). I had noticed a real uptick in the number of “solo variants” for games I followed on BGG, so I started to think that people were going to want a solo variant for New Bedford. But it would be another year of work before I actually got a solo mode I was happy with. In the roughly two years since I started working on the solo mode, a lot of new resources have appeared to assist designers of solo games, and I think it’s helpful to talk about how the Lonely Ocean mode was developed with regard to some of these resources.

When I started, I hadn’t read much about other designers’ approaches to solo games. I only had a few points of reference. Among the most well studied and popular was Agricola’s solo mode. Over several games, players try to beat their previous score, with some adjustments and requirements that change from game to game in a multi-game campaign. At its heart, you’re just aiming for a high score. And while that creates some good decisions that use the core mechanics, it’s really just an exercise in optimizing play against a limited number of random options. And with a known set of possible upgrades, the community around Agricola solo play had optimized that engine, turning it into a puzzle.

Morten Monrad Pedersen has designed a number of “Automa” solo modes for Stonemaier Games and has written a number of excellent posts on solo game design. If you only read one, read this overview. From the first paragraph after the introduction:

The philosophy behind my approach is that playing the solo version of game should feel like playing the multiplayer game. This doesn’t mean that every detail should be the same, but the soul of the game should be intact. Thus you should strive to avoid cutting out important parts of the game, and the solo player should face roughly the same choices and the same win-lose criteria as the multi-players.

That really captures my own feelings, although I didn’t find his blog on BGG until after the solo mode was 99% complete. [Not knowing about that blog is a big reason I want to share my own experience.]

So while I could have had players play simply for high score, that isn’t at all what I wanted to do with Lonely Ocean. New Bedford depends on several layers of interaction between players. And to me, a game without those layers just wouldn’t be New Bedford. That was my first major design decision. What I really wanted was to simulate the gameplay of a multi-player game by adding AI opponents. In fact, my vision was not just that you could play a solo game, but that two players could add in an extra bot and play a larger game. This is really an extension of the importance I place on interaction in my design philosophy.

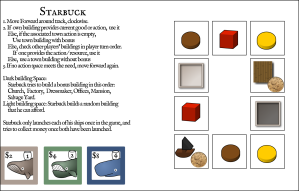

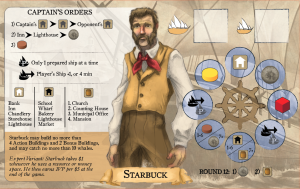

To actually capture the feel of a multiplayer game, I needed to figure out what sort of decisions players were making. I identified several defining elements of the New Bedford experience: blocking and non-blocking spaces, publicly available buildings, jockeying for the best choice of whales, and planning for your ships to return. These suggest several functions a good AI bot needs to perform: choosing action spaces to use, gathering resources and building buildings, launching and tracking ships, and collecting money.

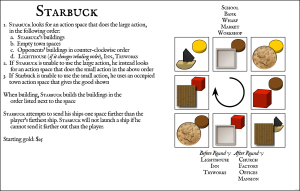

Because actions in New Bedford generally progress in a logical order, it wouldn’t make any sense to take a lot of them in random order. To keep the AI from getting too complex, I went with a simple rondel with the basic actions on it, in the order a player would naturally take them. The rondel also gave me an opportunity to add more variety and replayability by creating multiple AI “personalities” with different goals and strategies. [I discussed this in more depth in my Notes from New Bedford, so I won’t go over it again here.]

When playing against a human opponent, you have imperfect ability to predict what the opponent will do at any given time. However, if the bot simply advances around the rondel, you always know what it will do, and you can always win by blocking it. That effectively creates a degenerate strategy that eliminates the player’s decisions. On the other side, if you can’t predict the bot’s actions at all (i.e. they are completely random) it completely removes the aspect of trying to out-think your opponent. So the real key is developing a method to let the AI vary actions in a way that simulates a player making choices.

I looked to another favorite game that uses a bot to simulate an extra player: Glen More. In that game, a biased die moves the bot worker around the rondel, removing buildings. A biased die gives that great balance of having a good idea of what the bot is going to do next, without knowing perfectly. This recreates the player’s decision process from a multi-player game, where you have to evaluate the risk associated with several possible moves the opponent might make. Supplementing the rondel with a simple decision tree to determine the specifics of each action creates a flexible system where the same space on the rondel doesn’t get evaluated the same way every time. It also makes it difficult to see many turns into the future, since the possible outcomes change the state of the game.
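To make the biased-die idea concrete, here is a minimal Python sketch. It is only an illustration: the rondel contents, the die faces, and the function names are all assumptions invented for this example, not the actual Lonely Ocean (or Glen More) components. It shows how a player can know the odds of each next space without knowing the result.

```python
import random
from collections import Counter

# Hypothetical rondel: basic actions in the order a player would naturally take them.
RONDEL = ["gather resources", "build", "launch ship", "collect money", "pay for whales"]

# Hypothetical biased die: half the faces advance one space, the rest advance further.
BIASED_DIE = [1, 1, 1, 2, 2, 3]


def advance(position, rng):
    """Roll the biased die and move the bot marker around the rondel."""
    steps = rng.choice(BIASED_DIE)
    return (position + steps) % len(RONDEL)


def next_space_odds(position):
    """What the human player can work out in advance: the chance of each next space."""
    counts = Counter(RONDEL[(position + steps) % len(RONDEL)] for steps in BIASED_DIE)
    return {space: count / len(BIASED_DIE) for space, count in counts.items()}


if __name__ == "__main__":
    rng = random.Random()
    position = 0
    print("Odds for the bot's next space:", next_space_odds(position))
    position = advance(position, rng)
    print("The bot actually lands on:", RONDEL[position])
```

With these made-up die faces, the next space in order is the most likely result, but blocking it is a gamble rather than a guaranteed win, which is the kind of risk evaluation described above.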

This two-tiered system created a good turn-by-turn simulation of a player, but it did not do a great job of simulating how a player’s strategy shifts over the course of a game. The flow of the game typically involves building action buildings and launching ships early, and collecting money to pay for whales and building bonus buildings late. Early tests of the AI only did this occasionally, by chance. That meant I needed something much more robust that could react to the game.

I ended up achieving this using two methods. First, a few of the spaces on the rondels change actions after a certain round (e.g. switching from building a normal building in the first half of the game to building a bonus building in the second half of the game). This explicitly changes the bot behavior. The second way I made the AI adapt is more integrated into the gameplay. The decision tree defaults to simple actions if it can’t find a more complex one. As the game develops, more of these complex actions become available, so the end result of the decision tree changes without requiring a complete evaluation of the game state (i.e. scores, number of buildings, resources, and the other player’s behavior) every turn. Each possible action is simply evaluated as “Can the bot use this building? Y/N”. You don’t want players working harder to figure out the AI’s turn than they do figuring out their own move, so it is really important to minimize the effort required for the player to determine the AI’s actions.
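Both methods can be sketched in a few lines of Python. This is a rough illustration under stated assumptions: the switch round, the building names, and the function names are hypothetical and are not taken from the Lonely Ocean rules.

```python
def rondel_action(space, round_number, switch_round=7):
    """Method 1: a rondel space changes its action after a certain round.

    switch_round=7 is an assumed value chosen only for this example.
    """
    if space == "build":
        if round_number >= switch_round:
            return "build bonus building"
        return "build normal building"
    return space


def choose_action(priority_list, can_use, default_action):
    """Method 2: walk a priority list asking only 'Can the bot use this? Y/N'.

    If no complex action is available, fall back to the simple default.
    """
    for building in priority_list:
        if can_use(building):
            return building
    return default_action


if __name__ == "__main__":
    # Hypothetical priority list of complex actions the bot would prefer.
    priorities = ["complex building A", "complex building B"]

    built_in_town = set()  # early game: nothing complex has been built yet
    print(choose_action(priorities, lambda b: b in built_in_town, "simple action"))

    built_in_town.add("complex building B")  # later: more options exist
    print(choose_action(priorities, lambda b: b in built_in_town, "simple action"))

    print(rondel_action("build", round_number=3))
    print(rondel_action("build", round_number=9))
```

The same call to `choose_action` gives a different answer as the town grows, so the bot's behavior shifts over the game even though the player only ever answers a series of yes/no questions.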

Minimizing the effort also depends on the amount of accounting needed in a solo variant. Since a solo variant frequently adds something to the game to manage the AI, or has the player doing multiple players’ worth of work, games that aren’t “fiddly” can easily become so in a solo variant. This is a reason to do everything you can to minimize the accounting required for the AI, which keeps the amount of time the player is actually playing close to the real game. Anything you can pre-load before the game starts keeps the downtime between decisions low. For Lonely Ocean, this meant several changes. I [eventually] eliminated coins and resources for the AI, and randomized the buildings at the start of the game, to eliminate tracking that information or performing the randomization later.

The reason I was able to make this work is the last great secret of solo variant design. Let the AI cheat. This opens up a lot of space to manage things that would normally be difficult to deal with. In New Bedford, carefully managing money for your ships is one of the core conflicts. In early tests, the AI would sometimes run away with too much money, turning it into points at a poor rate; other times it would completely skip collecting money when it needed it, in a way that no other player would. But the AI is sort of a black box, and as long as its actions simulate a human opponent, you don’t need to duplicate the actual decision process. An AI that cheats by ignoring money acts like a player who perfectly manages money. The trick is to balance the cheating with inefficiencies in the AI system.

Without collecting resources or money, the AI frequently acts only to block action spaces the player wants to use. But it does it “fairly” in a way that supports its own “strategy”. It never feels like the AI is blocking you just to block you (except maybe the mad captain Ahab). Through testing, I also added some hard limits on how much the AI can cheat. If it runs away with points early, the AI will run out of things to do, and be left blocking even more spaces for the player.

The late game—particularly the last round—is a critical time, and deserved some special consideration. The normal decision process breaks down as players try to finish their plans. For the AI to accomplish the same thing, I added special handling for the last round of actions. Don’t be afraid to handle special circumstances with special rules.

Finally, some thoughts about winning. For Lonely Ocean, I wanted players to be competing directly against the AI, so that the AI could actually win, not just stop the player from winning. Solo games share a lot with cooperative games, in that players are pitted against the deck, and everyone (i.e. the one person in a solo game) wins or loses together. In this 2013 interview, designer Matt Leacock mentions a theoretical ideal loss rate of about 70%. This thread on BGG suggests most players want a loss rate of between 75% and 90%. I couldn’t find much specific to solo gaming, but this post supports a similar conclusion. And [to engineer it a bit] if it were a 3, 4, or 5 player game, any given player would be expected to lose 67%, 75%, and 80% of the time (respectively) against equally skilled players. Since we’re simulating the multiplayer experience, this suggests a comparable loss rate for the solo player. I think this is more about personal preference, but it is also good practice to give the AI flexibility for the player to make it easier or harder. In Lonely Ocean you can do this by tweaking a few of the limits and parameters for the AI.
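The multiplayer figures above come from simple arithmetic: with n equally skilled players, each wins 1/n of the time and so loses (n - 1)/n of the time. A tiny Python sketch (the function name is just an illustrative choice):

```python
def expected_loss_rate(players):
    """Chance that one given player loses among equally skilled players."""
    if players < 2:
        raise ValueError("need at least two players for anyone to lose")
    return (players - 1) / players


if __name__ == "__main__":
    for n in (3, 4, 5):
        print(f"{n} players: lose about {expected_loss_rate(n):.0%} of the time")
```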

So, to sum up these lessons:

- Simulate the gameplay of a multi-player game by adding AI opponents

- Add more variety and replayability by creating multiple AI “personalities”

- Let the AI vary actions in a way that simulates a player making choices

- Recreate the player’s decision process from a multi-player game

- Minimize the effort required for the player to determine the AI’s actions

- Let the AI cheat; balance the cheating with inefficiencies in the AI system

- Handle special circumstances with special rules

- Give the AI flexibility for the player to make it easier or harder

I want to thank Mike Mullins, solo gamer and designer extraordinaire, for helping me develop and test Lonely Ocean, and without whom its creation would not have been possible. I had been working on it for a year, and it was close to being ready, but [and this is a little behind-the-scenes glimpse of the campaign] I still wasn’t absolutely sure Lonely Ocean would work at the time the second Kickstarter campaign launched. But we solved the last few issues just in time to make it into the campaign as a stretch goal. It still took another several months of testing and refining.

This process has led me to discover a number of design strategies for developing my AI, and I hope my experience can be helpful to other designers. Solo variant design has progressed a lot in the past few years. In my research, it seems like more and more designers are homing in on these same basic concepts to establish guidelines for solo variant design. Certainly not every game needs to follow these, and there are innumerable ways a designer can accomplish them. But I think these establish a good baseline for what a solo variant can accomplish. You may be playing alone, but you’re not designing alone, and there are a lot of great resources to help designers create a great solo variant.

Additional Resources

From “Automa” designer Morten Monrad Pedersen:

- Blog on BGG

- Automa Overview

- AI Personality design

- Motivation for making a solo variant on Stonemaier Games blog

AI development example for Campaign Trail on League of Gamemakers

AI Development Example from Lucid Phoenix Games [Ed note: corrected link]

1 Player Guild on BGG

From solo gamer Willie Hung:

- What players want in a solo variant on Daniel Solis’ Blog

- Why players play solo games

- More reasons players play solo games

From solo gamer and solo variant designer Mike Mullins